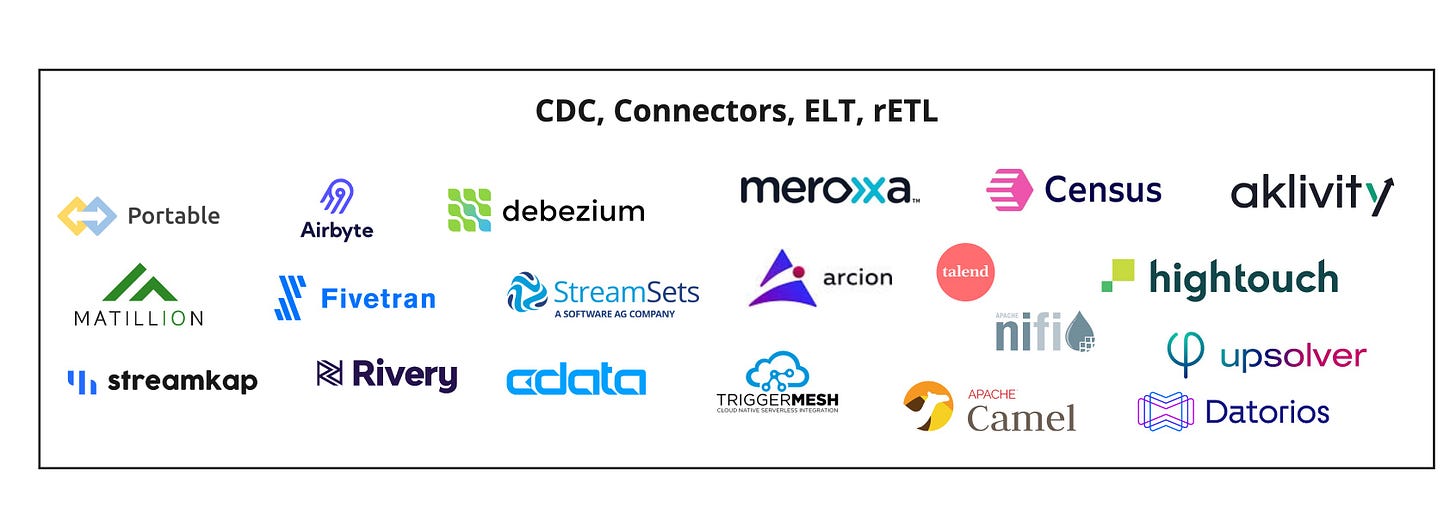

This post covers connectors, change data capture (CDC), ELT, and reverse ETL (rETL) solutions and providers. Below is the current list of tools that fall into this category.

This is Part 2 of a multi-post series on the real-time ecosystem. If you haven’t already, read Part 1 for a description of the use case.
